Machine Learning Project: Predicting Student Drop-Out

Keywords: EDA, feature engineering, logistic regression, support vector machine, decision tree

Context

This case study uses four data sources: Financial Aid data, Static (demographic) data, Progress data (which includes each student's GPA for each term), and a training set with studentID and labels indicating whether each student has dropped out. The goal of this case study is to use logistic regression, SVM, and decision tree/random forest to predict whether a student will drop out and to find the model with the highest accuracy. In doing so, we can identify the best predictors of student attrition. The dataset comes from the Bill & Melinda Gates Foundation.
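Before any modeling, the four sources need to be joined into one row per student. The sketch below is a minimal pandas version. It assumes each table has already been reduced to one row per student (per-term progress records aggregated first) and that all tables share a key column named `StudentID`. The column names and toy values are hypothetical, not the project's actual schema.

```python
import pandas as pd


def build_modeling_table(train, static, financial_aid, progress_features):
    """Left-join each source onto the labelled training set, one row per student."""
    table = train
    for source in (static, financial_aid, progress_features):
        # validate="one_to_one" raises a MergeError if a source still has
        # several rows per student (e.g. un-aggregated per-term records).
        table = table.merge(source, on="StudentID", how="left", validate="one_to_one")
    return table


# Hypothetical toy tables standing in for the real data sources.
train = pd.DataFrame({"StudentID": [1, 2, 3], "Dropout": [0, 1, 0]})
static = pd.DataFrame({"StudentID": [1, 2, 3], "EntryAge": [18, 19, 22]})
financial_aid = pd.DataFrame({"StudentID": [1, 3], "Parent_AGI": [55000, 72000]})
progress_features = pd.DataFrame({"StudentID": [1, 2, 3], "Final_Cum_GPA": [3.2, 2.7, 3.9]})

table = build_modeling_table(train, static, financial_aid, progress_features)
print(table)
```

A left join keeps every labelled student even when a source has no record for them (student 2 ends up with a missing `Parent_AGI`), so gaps surface as NaN to be imputed later instead of silently shrinking the training set.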

The full description and code for this project can be found here.

Team

I worked independently on this project.

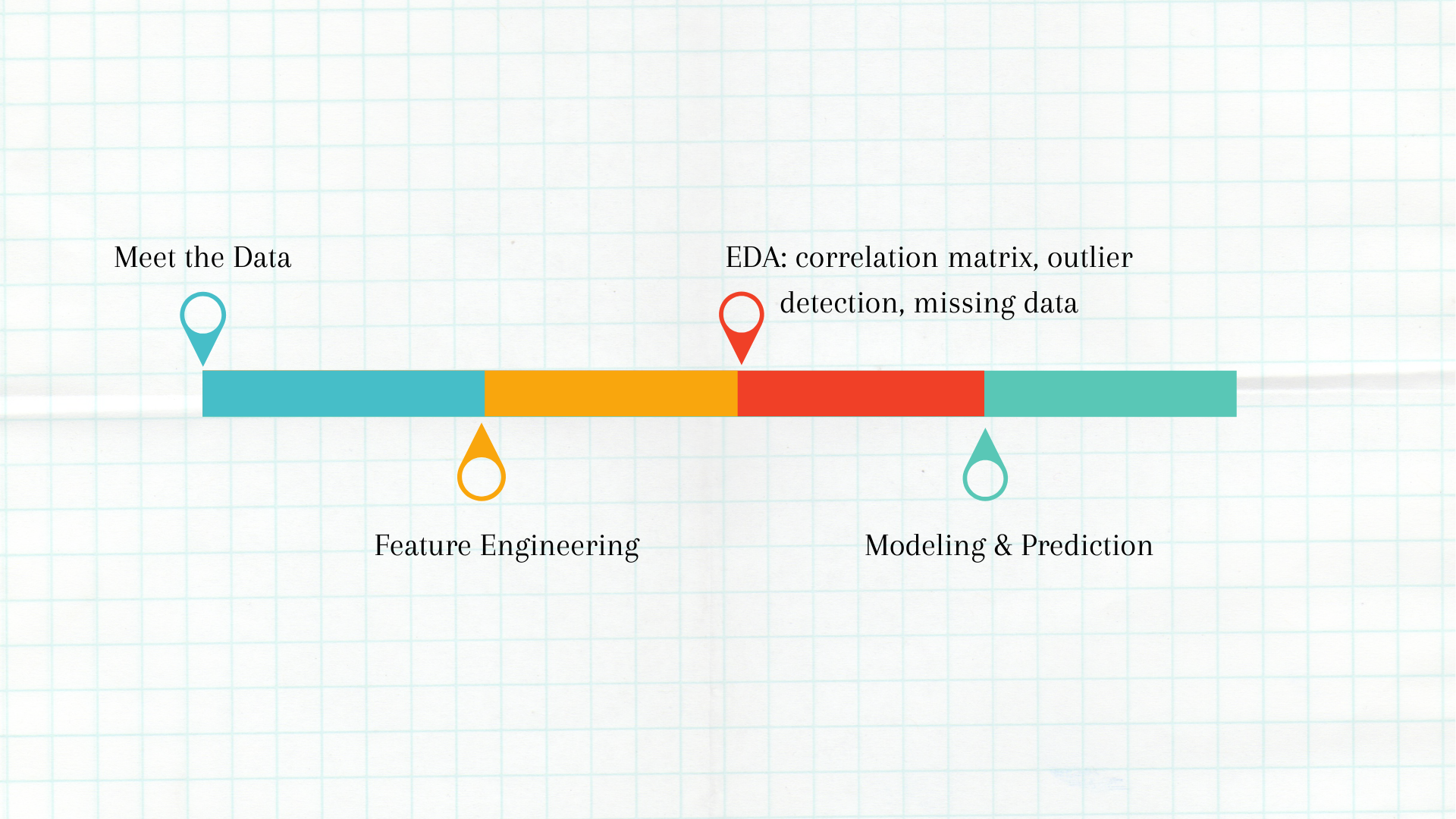

Project Workflow

Results

In this case study, I examined 39 features and their importance in three models: logistic regression, support vector machines, and Random Forest.
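A comparison of this kind can be sketched with scikit-learn as follows. This is not the project's code and does not reproduce its reported accuracy. It assumes a numeric feature matrix and a binary dropout label, uses synthetic data from `make_classification` (39 features, to mirror the count above), and picks illustrative hyperparameters. Feature ranking here uses the forest's impurity-based `feature_importances_`, which is one common form of Random Forest feature selection.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC


def compare_models(X, y, cv=5):
    """Mean cross-validated accuracy for each of the three model families."""
    models = {
        # Linear models and SVMs are scale-sensitive, so scale inside the pipeline.
        "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "svm": make_pipeline(StandardScaler(), SVC()),
        "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    }
    return {
        name: cross_val_score(model, X, y, cv=cv, scoring="accuracy").mean()
        for name, model in models.items()
    }


def top_features(X, y, feature_names, k=9):
    """Rank features by the forest's impurity-based importances and keep the top k."""
    forest = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)
    ranked = sorted(
        zip(feature_names, forest.feature_importances_),
        key=lambda pair: pair[1],
        reverse=True,
    )
    return ranked[:k]


# Synthetic stand-in for the real data: 39 features, binary label.
X, y = make_classification(n_samples=500, n_features=39, n_informative=9, random_state=0)
feature_names = [f"feature_{i}" for i in range(X.shape[1])]

scores = compare_models(X, y)
ranking = top_features(X, y, feature_names)
print(scores)
print(ranking)
```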

Currently, the model with the highest accuracy is Random Forest, with an accuracy score of 0.87. The best predictors from this model, selected by the Random Forest feature selection method, are:

Parent_AGI_Imp, RegistrationYear, EntryAge_Imp, Final_Cum_GPA, Terms, Start_Term_GPA, Final_Term_GPA, GPA_Growth, First_Year_GPA.

Limitations

However, there is still a lot of room for improvement in the models. Here are a few points that should be addressed to improve model accuracy:

In the third round of iteration, I found issues with the GPA variables that I engineered from the student progress data. Namely, 573 records in my dataset have a Final_Cum_GPA, Start_Term_GPA and Final_Term_GPA of 0. This is because the original dataset contains records where the student's term GPA and final GPA are 0. This is a problem for Final_Cum_GPA because I engineered this feature from the last cum_GPA recorded for each student: when a student has non-zero, non-NaN grades in earlier terms but zeros in the last term, Final_Cum_GPA is calculated as 0, which is not correct. Because of time constraints, I dropped all 573 records with 0 for Final_Cum_GPA, Start_Term_GPA and Final_Term_GPA from my current data. In doing so, however, I also dropped records that should have a valid, non-zero Final_Cum_GPA and Final_Term_GPA. To solve this problem, I need to go back and check whether my hypothesis about where these zeros come from is correct.

Related to this, the zero GPAs in the original dataset may point to a data leakage issue. I suspect that a zero GPA might indicate that the student dropped out in that semester, although the data dictionary does not say what a zero Term_GPA or Cum_GPA means. If this hypothesis is true, GPA features built from these records would partly encode the label, and I could engineer a feature that closely mirrors Dropout from the zero GPA records alone.
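One way to avoid throwing away the 573 rows is to treat an exact 0 as missing and take each student's last *non-zero* cumulative GPA, while keeping a separate flag for trailing zeros so the leakage hypothesis can be checked. The sketch below assumes a long-format progress table with hypothetical columns `StudentID`, `Term` (an orderable term index), `Cum_GPA` and `Term_GPA`; the "zero means missing" rule is itself an assumption until the data dictionary confirms it.

```python
import numpy as np
import pandas as pd


def engineer_gpa_features(progress):
    """Per-student GPA features that treat an exact 0 GPA as missing, not as a grade."""
    df = progress.sort_values(["StudentID", "Term"]).copy()
    # Assumed rule: replace 0 with NaN so a trailing zero cannot
    # overwrite an earlier valid cumulative GPA.
    df["Cum_GPA_nonzero"] = df["Cum_GPA"].mask(df["Cum_GPA"].eq(0))
    grouped = df.groupby("StudentID")
    return pd.DataFrame({
        # GroupBy.last() skips NaN, so this is the last non-zero cumulative GPA.
        "Final_Cum_GPA": grouped["Cum_GPA_nonzero"].last(),
        # True when the most recent recorded term GPA is exactly 0; a candidate
        # leakage signal worth comparing against the Dropout label.
        "Ends_With_Zero_GPA": grouped["Term_GPA"].last().eq(0),
        # True when a student has no non-zero cumulative GPA at all.
        "All_Zero_GPA": grouped["Cum_GPA_nonzero"].count().eq(0),
    })


# Hypothetical toy progress records: one row per student per term.
progress = pd.DataFrame({
    "StudentID": [1, 1, 1, 2, 2, 3, 3],
    "Term":      [1, 2, 3, 1, 2, 1, 2],
    "Cum_GPA":   [3.0, 3.2, 0.0, 2.5, 2.7, 0.0, 0.0],
    "Term_GPA":  [3.0, 3.4, 0.0, 2.5, 2.9, 0.0, 0.0],
})

features = engineer_gpa_features(progress)
print(features)
```

Student 1 keeps a Final_Cum_GPA of 3.2 instead of 0, and only student 3 (no non-zero grades at all) is left without one. Cross-tabulating `Ends_With_Zero_GPA` against the Dropout label (for example with `pd.crosstab`) would be a quick first test of whether zeros really track dropout.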

Another way to improve model performance is to scale all the numerical features, since so far I have only scaled Parent_AGI_Imp. I'm also curious whether AGI would actually have an effect on dropout. In my exploratory data analysis I decided to leave this variable out because it did not seem to indicate much about student attrition, but to be on the safe side I can add it back in and see how the models perform.
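Scaling every numerical feature can be done in one step with a `ColumnTransformer` inside a `Pipeline`, so that during cross-validation the scaler is fit only on the training folds. The sketch below assumes a few of the numerical columns named above and uses randomly generated toy values, not the project's data. Scaling matters mainly for logistic regression and SVM. Tree-based models such as Random Forest split on thresholds and are largely unaffected by it.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler

# Assumed list of numerical columns; extend with any other numeric features.
NUMERIC_FEATURES = ["Parent_AGI_Imp", "EntryAge_Imp", "Final_Cum_GPA", "Terms"]


def build_scaled_model(numeric_features):
    """Standardize all numeric columns, pass anything else through, then classify."""
    preprocess = ColumnTransformer(
        transformers=[("num", StandardScaler(), numeric_features)],
        remainder="passthrough",
    )
    return Pipeline([
        ("preprocess", preprocess),
        ("clf", LogisticRegression(max_iter=1000)),
    ])


# Toy data purely for illustration; not the project's dataset.
rng = np.random.default_rng(0)
X = pd.DataFrame({
    "Parent_AGI_Imp": rng.normal(60_000, 25_000, 200),
    "EntryAge_Imp": rng.normal(19, 2, 200),
    "Final_Cum_GPA": rng.uniform(0, 4, 200),
    "Terms": rng.integers(1, 9, 200),
})
y = (X["Final_Cum_GPA"] < 2.0).astype(int)

model = build_scaled_model(NUMERIC_FEATURES)
model.fit(X, y)
scaled = model.named_steps["preprocess"].transform(X)
print(scaled.mean(axis=0).round(6), scaled.std(axis=0).round(6))
```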

Related to this, there is more feature engineering I can do, such as converting the RegistrationYear variable to categories. Right now it is still numeric (i.e. 2011, 2012, 2013), and as a numerical variable it is unlikely to add much to the prediction.
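Converting RegistrationYear to categories amounts to one-hot encoding it. The sketch below uses `pd.get_dummies` on a toy table. The `RegYear` prefix and the toy rows are hypothetical.

```python
import pandas as pd


def encode_registration_year(df):
    """Replace the numeric RegistrationYear column with one indicator column per year."""
    out = df.copy()
    # Cast to string so each year is treated as a label, not a quantity.
    out["RegistrationYear"] = out["RegistrationYear"].astype(str)
    return pd.get_dummies(out, columns=["RegistrationYear"], prefix="RegYear", dtype=int)


students = pd.DataFrame({"StudentID": [1, 2, 3, 4], "RegistrationYear": [2011, 2012, 2013, 2012]})
encoded = encode_registration_year(students)
print(encoded)
```

For logistic regression, passing `drop_first=True` to `get_dummies` avoids perfectly collinear indicator columns alongside the intercept. If new years can appear at prediction time, scikit-learn's `OneHotEncoder(handle_unknown="ignore")` inside a pipeline is the more robust choice.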

I am also considering more refined approaches to detecting and removing outliers. I tried the IQR method, which did not work well on my data, so to improve model performance I will look for a better method to detect and remove outliers.
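One alternative worth comparing against IQR is scikit-learn's `IsolationForest`. The IQR rule checks each feature separately, whereas an isolation forest scores each student on all features jointly. The sketch below runs both on toy 2-D data with one planted outlier. The `contamination` value (the assumed share of outliers) and the column names are illustrative assumptions, and neither method is confirmed to suit this dataset.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import IsolationForest


def iqr_outliers(df, k=1.5):
    """Flag a row if any column falls outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1 = df.quantile(0.25)
    q3 = df.quantile(0.75)
    iqr = q3 - q1
    mask = (df < q1 - k * iqr) | (df > q3 + k * iqr)
    return mask.any(axis=1)


def isolation_forest_outliers(df, contamination=0.02, random_state=0):
    """Flag rows that an isolation forest labels as anomalies (-1)."""
    forest = IsolationForest(contamination=contamination, random_state=random_state)
    labels = forest.fit_predict(df)  # -1 = outlier, 1 = inlier
    return pd.Series(labels == -1, index=df.index)


# Toy data: 200 ordinary rows plus one extreme row appended at the end.
rng = np.random.default_rng(42)
values = np.vstack([rng.normal(0, 1, size=(200, 2)), [[8.0, -8.0]]])
data = pd.DataFrame(values, columns=["feature_a", "feature_b"])

iqr_flags = iqr_outliers(data)
iso_flags = isolation_forest_outliers(data)
print(int(iqr_flags.sum()), int(iso_flags.sum()))
```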

I've also read in the research literature that some important predictors of student attrition are high school achievement variables such as high school GPA and SAT score, parent education, and first-year college GPA. My dataset currently has features for parent education and first-year college GPA, but unfortunately I cannot use its high school unweighted GPA because about 70% of the values for this variable are missing. As a result, I expect that even if I make the four improvements above, it would be difficult to push the models beyond a certain threshold, because a variable that might explain much of the variability in student attrition (here, the dropout classification) is missing from our data.
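A per-column missingness check is a quick way to decide which variables are too sparse to use. The sketch below computes the share of missing values in each column and lists those above a threshold. The column names, toy values and the 50% cut-off are hypothetical choices for illustration.

```python
import numpy as np
import pandas as pd


def high_missing_columns(df, threshold=0.5):
    """Share of missing values per column, keeping only columns above the threshold."""
    share = df.isna().mean()
    return share[share > threshold].sort_values(ascending=False)


# Hypothetical toy static data: 7 of 10 high school GPA values are missing.
static = pd.DataFrame({
    "HS_GPA_Unweighted": [3.1, np.nan, np.nan, np.nan, 2.8, np.nan, np.nan, np.nan, np.nan, 3.5],
    "Parent_Education": [1, 2, 2, 3, np.nan, 1, 2, 3, 1, 2],
})

too_sparse = high_missing_columns(static)
print(too_sparse)
```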